publications

* denotes co-first authorship. See also my Google Scholar profile.

2025

The physics of data and tasks: Theories of locality and compositionality in deep learning. PhD Dissertation, École Polytechnique Fédérale de Lausanne (EPFL), 2025

Bigger isn’t always memorizing: Early stopping overparameterized diffusion models. arXiv preprint, 2025

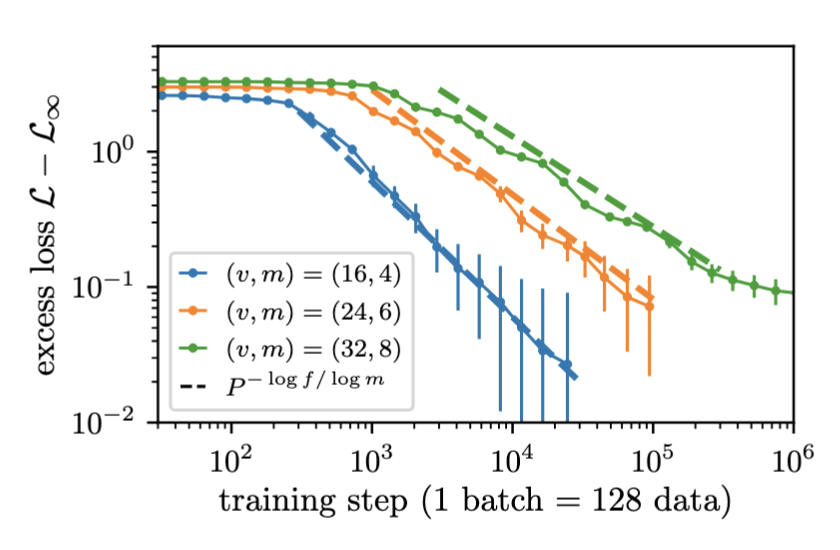

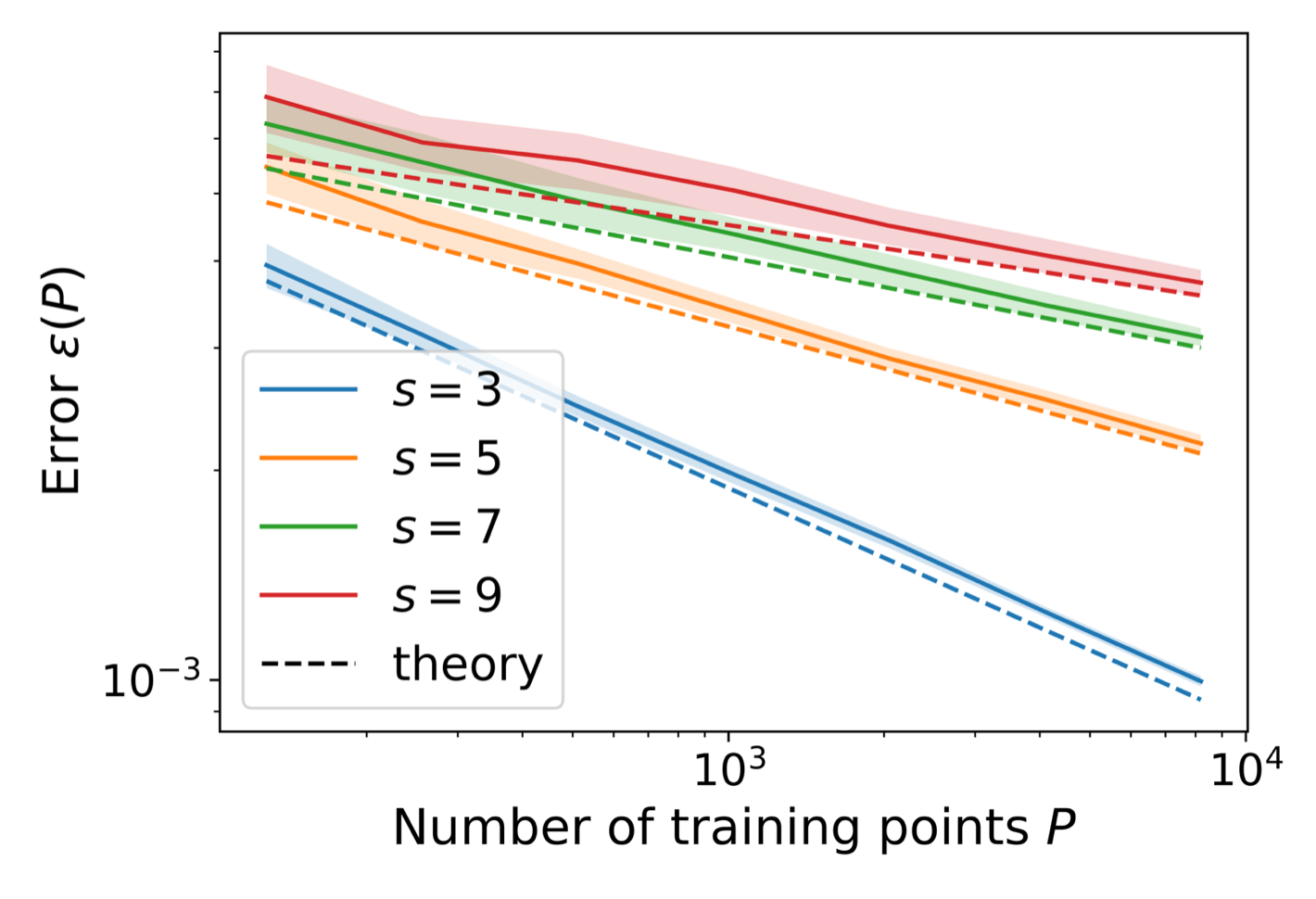

Scaling laws and representation learning in simple hierarchical languages: Transformers versus convolutional architectures. Physical Review E, 2025

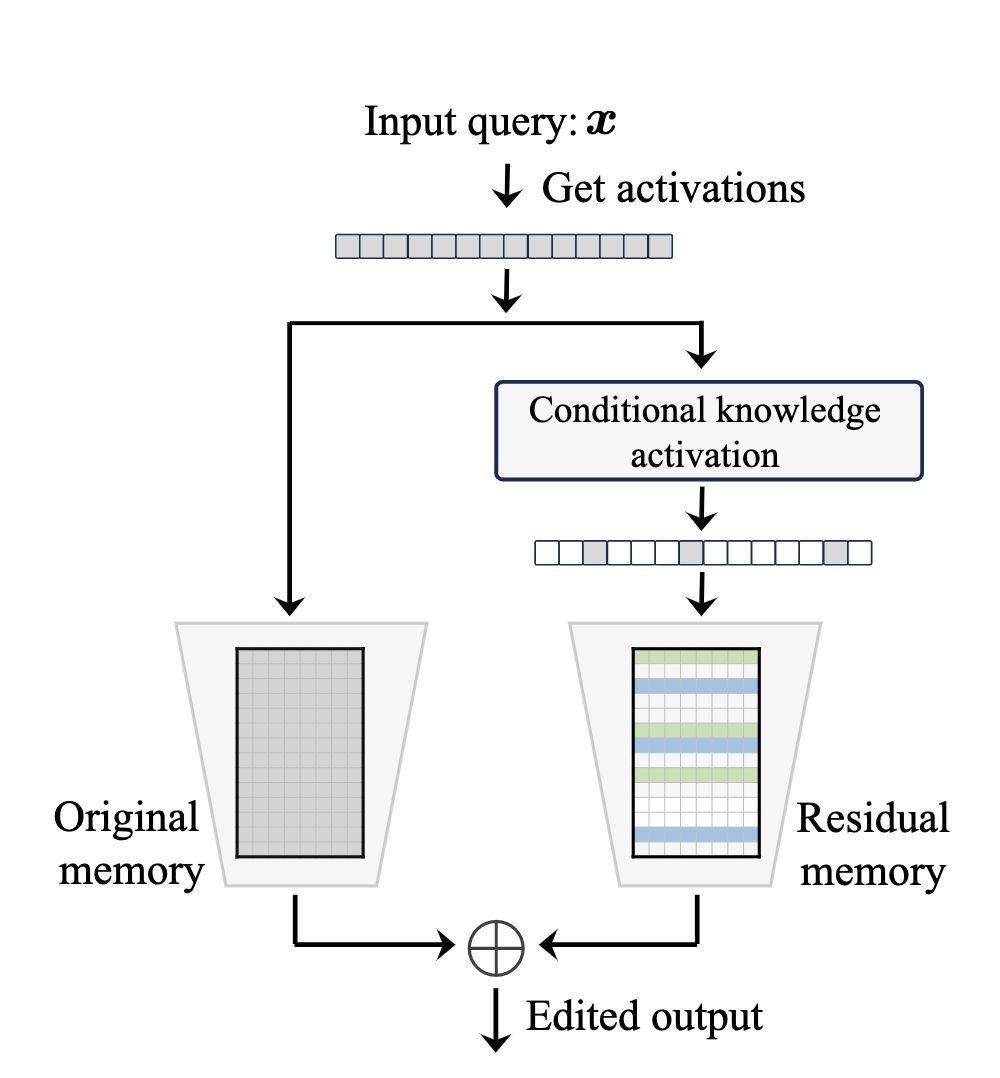

MEMOIR: Lifelong model editing with minimal overwrite and informed retention for LLMs. Advances in Neural Information Processing Systems (NeurIPS), 2025

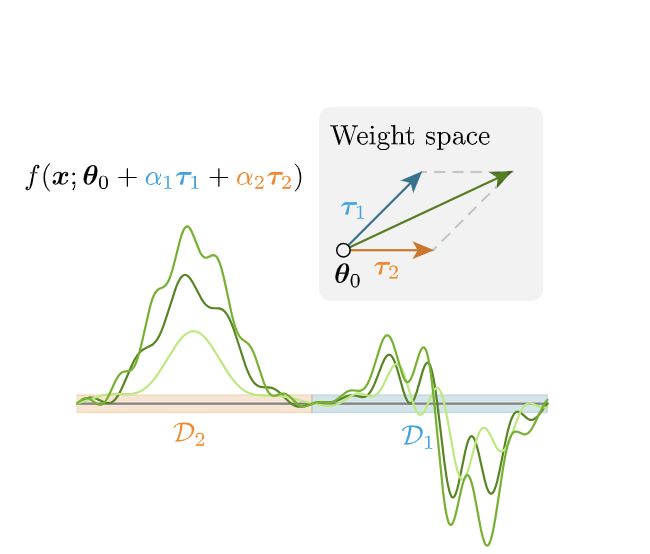

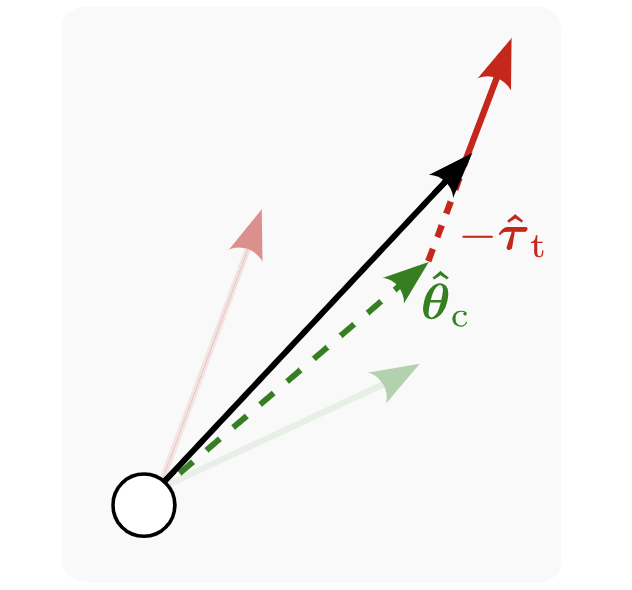

Backdoor unlearning through linear task decomposition. ICML 2025 Workshop on Machine Unlearning for Generative AI, 2025

How compositional generalization and creativity improve as diffusion models are trained. International Conference on Machine Learning (ICML), PMLR, 2025

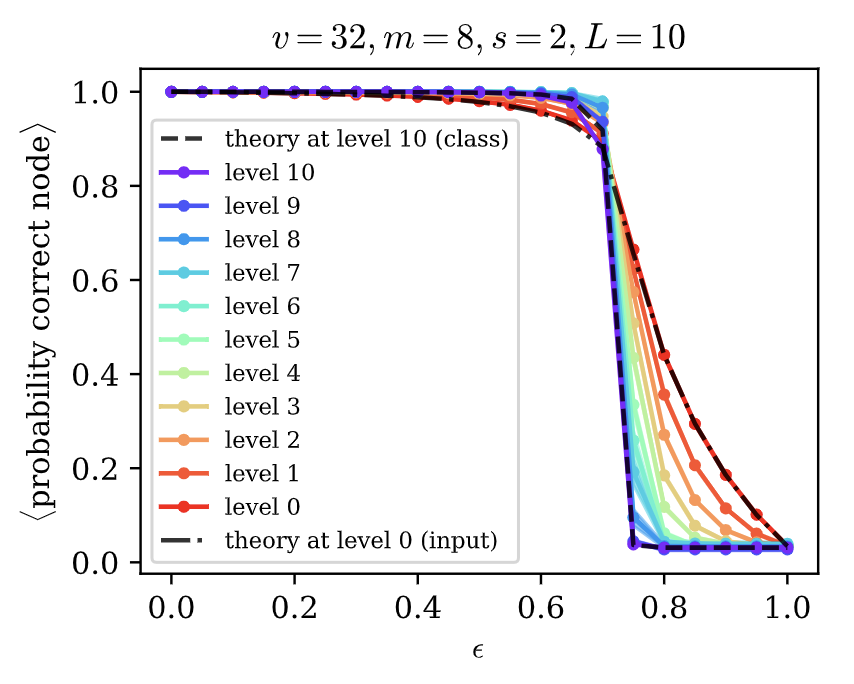

Probing the latent hierarchical structure of data via diffusion models. International Conference on Learning Representations (ICLR), 2025

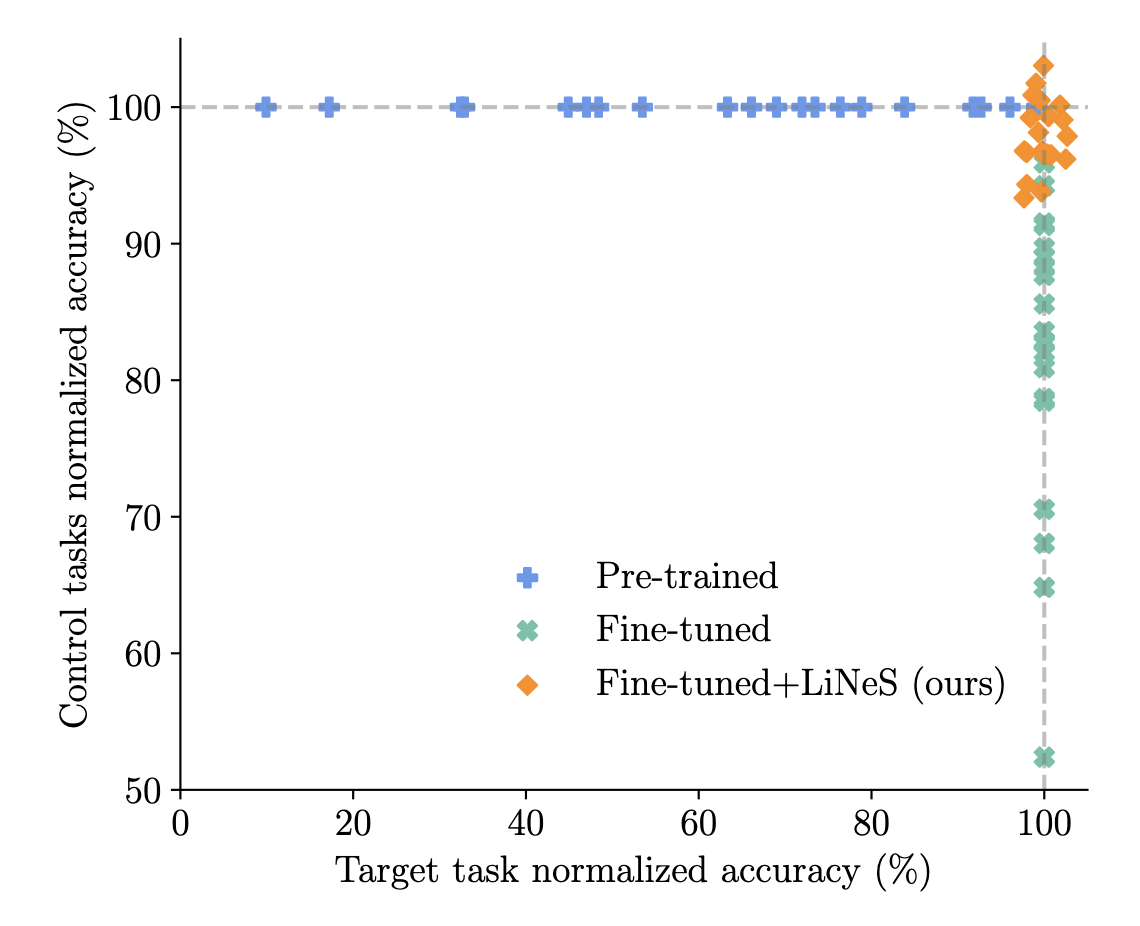

LiNeS: Post-training layer scaling prevents forgetting and enhances model merging. International Conference on Learning Representations (ICLR), 2025

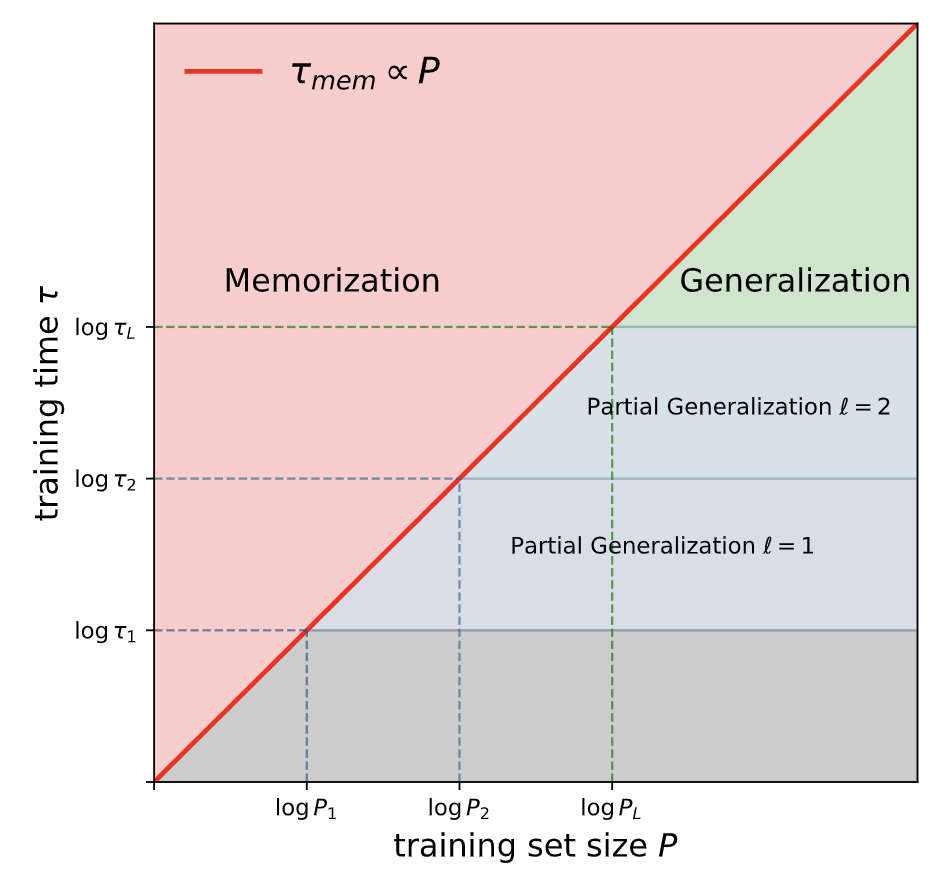

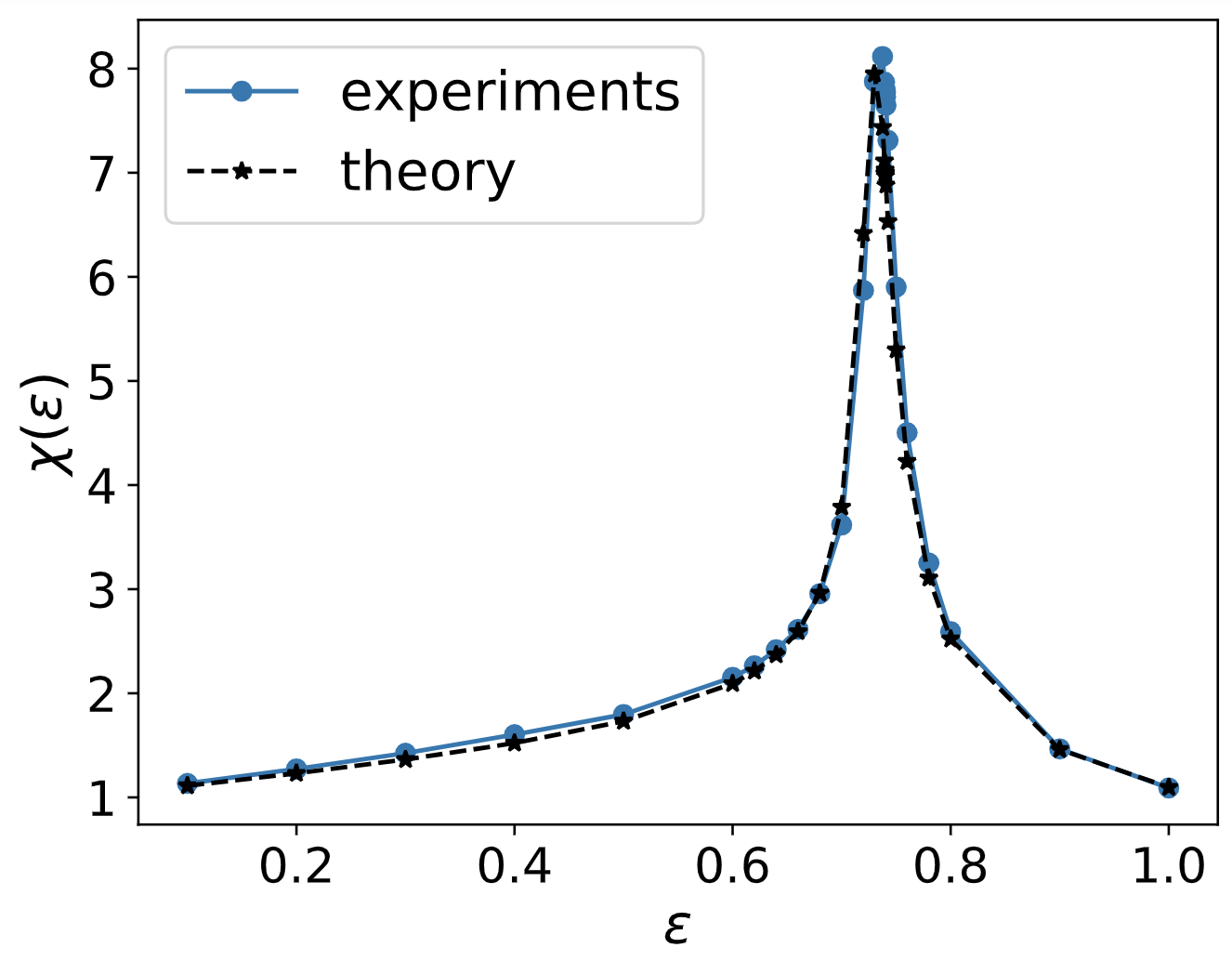

A phase transition in diffusion models reveals the hierarchical nature of data. Proceedings of the National Academy of Sciences (PNAS), 2025

2024

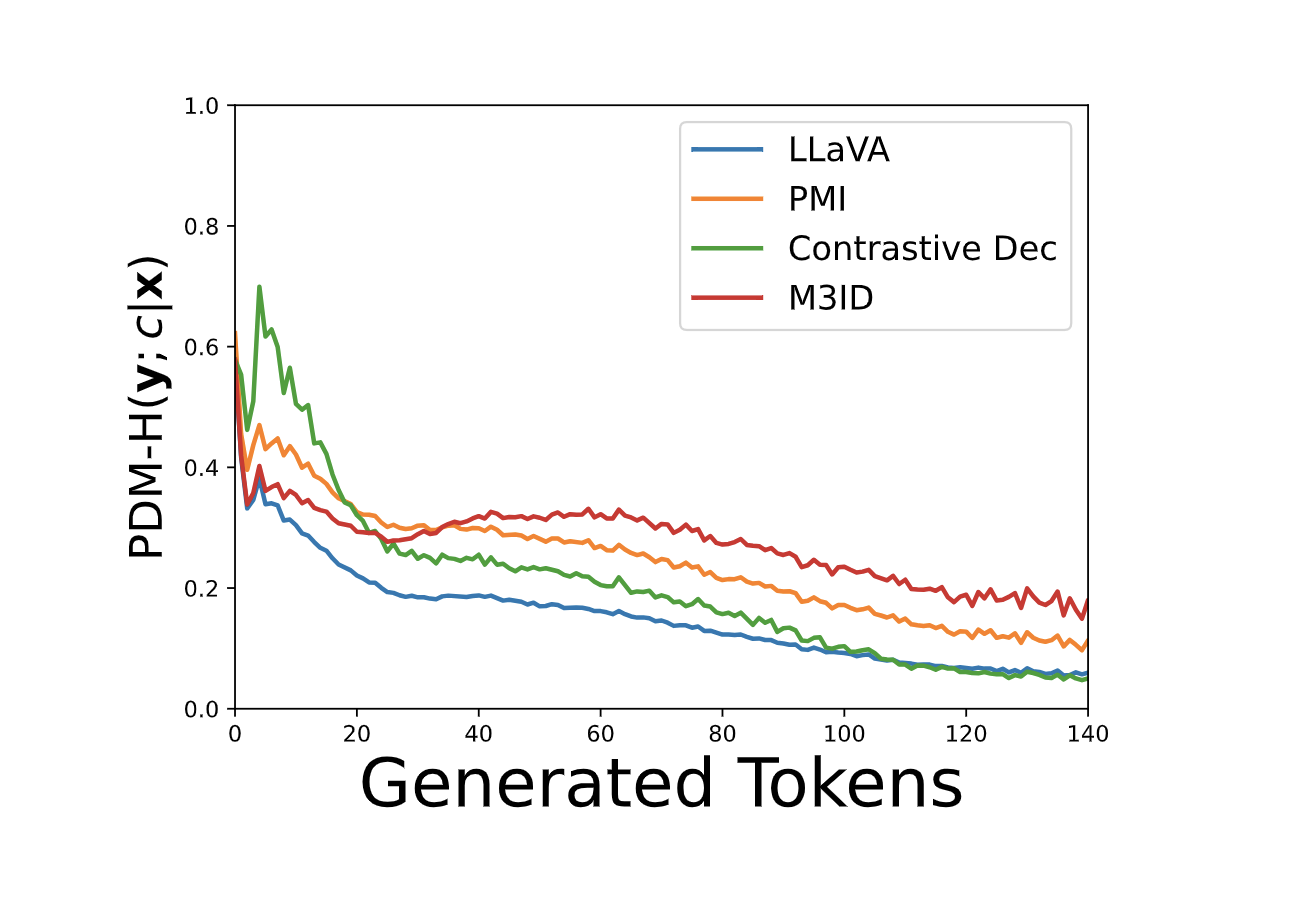

Multi-modal hallucination control by visual information grounding. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

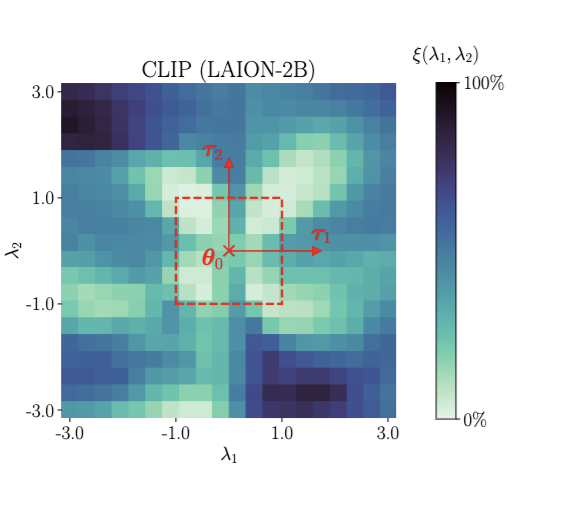

Task addition and weight disentanglement in closed-vocabulary models. ICML 2024 Efficient Systems for Foundation Models Workshop, 2024

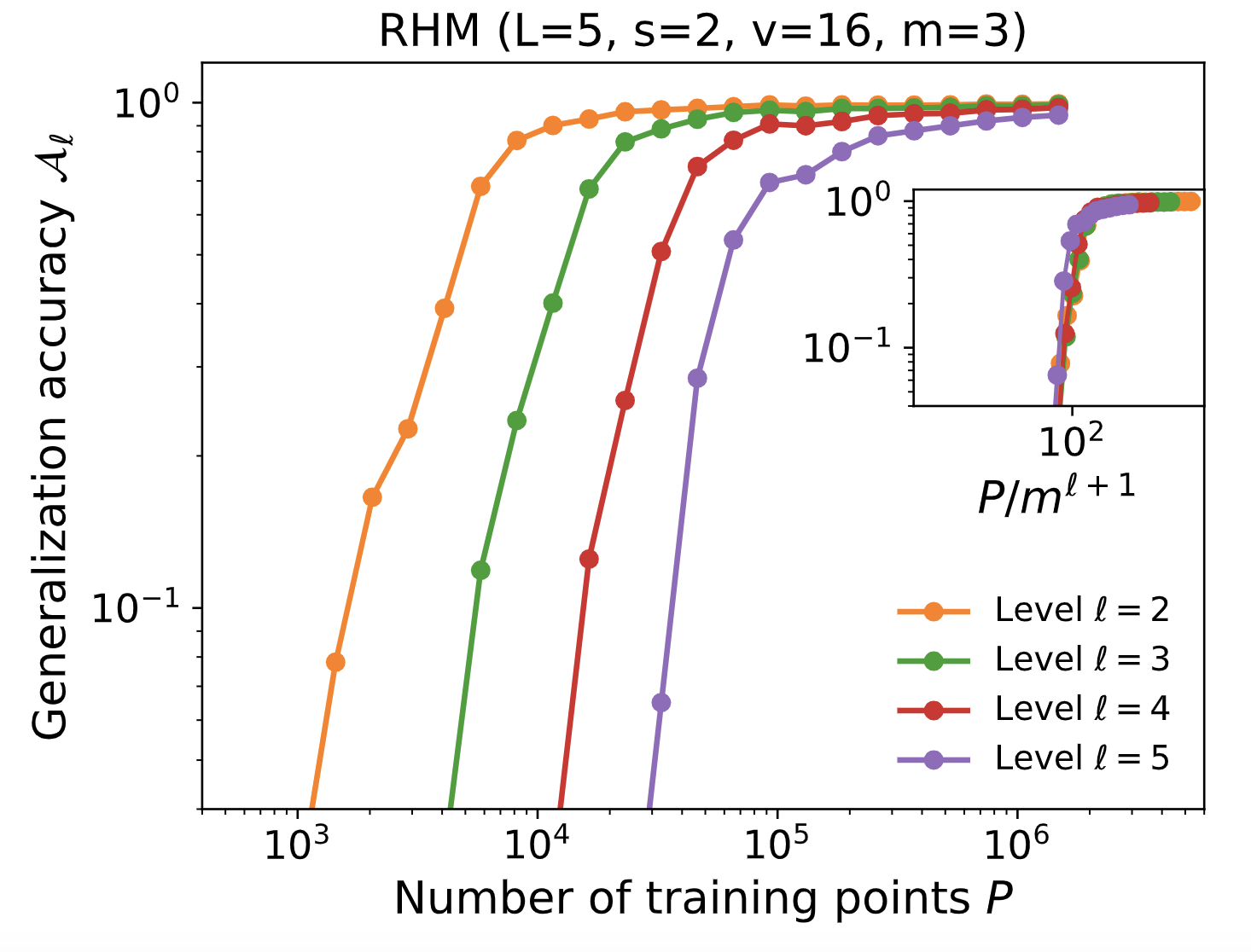

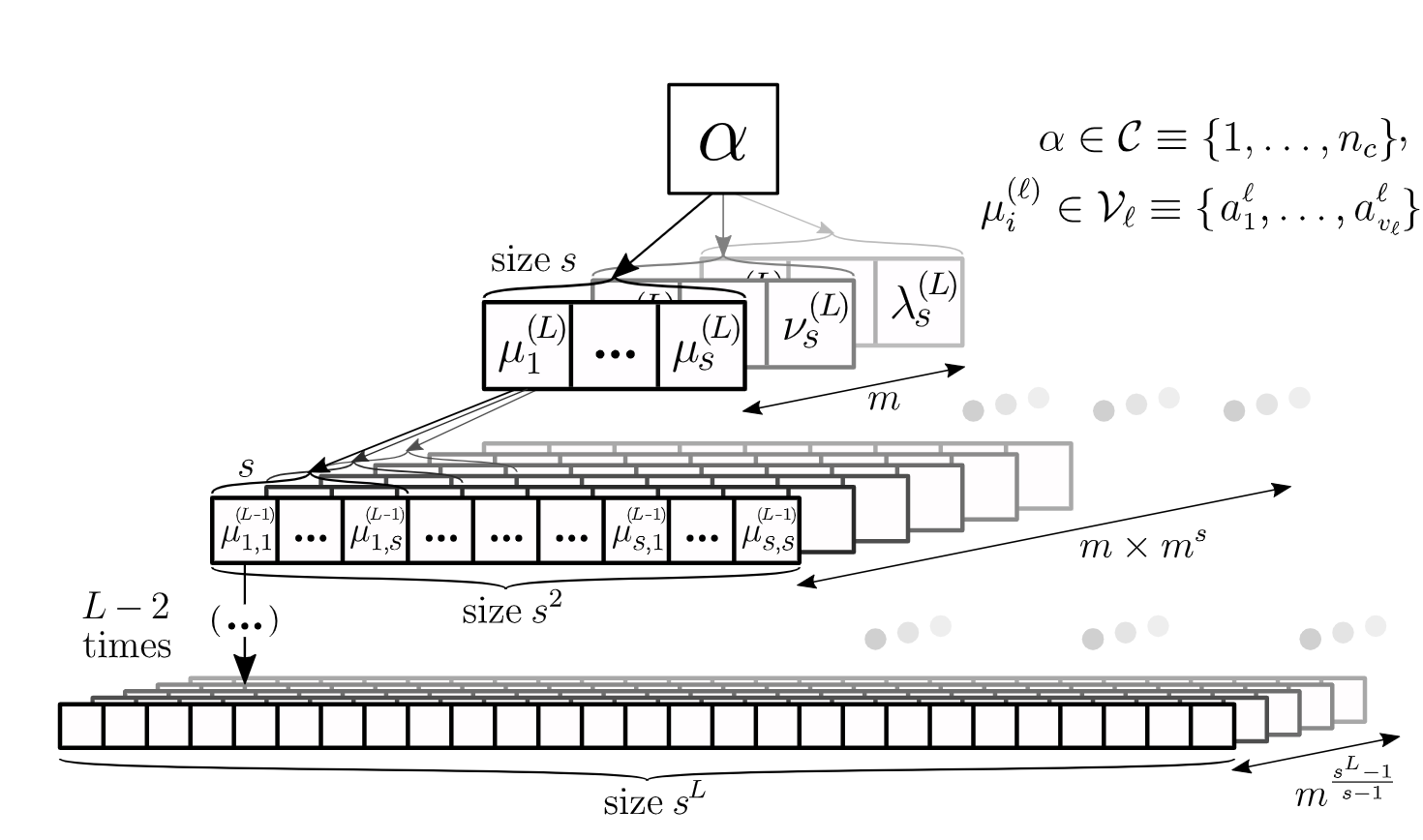

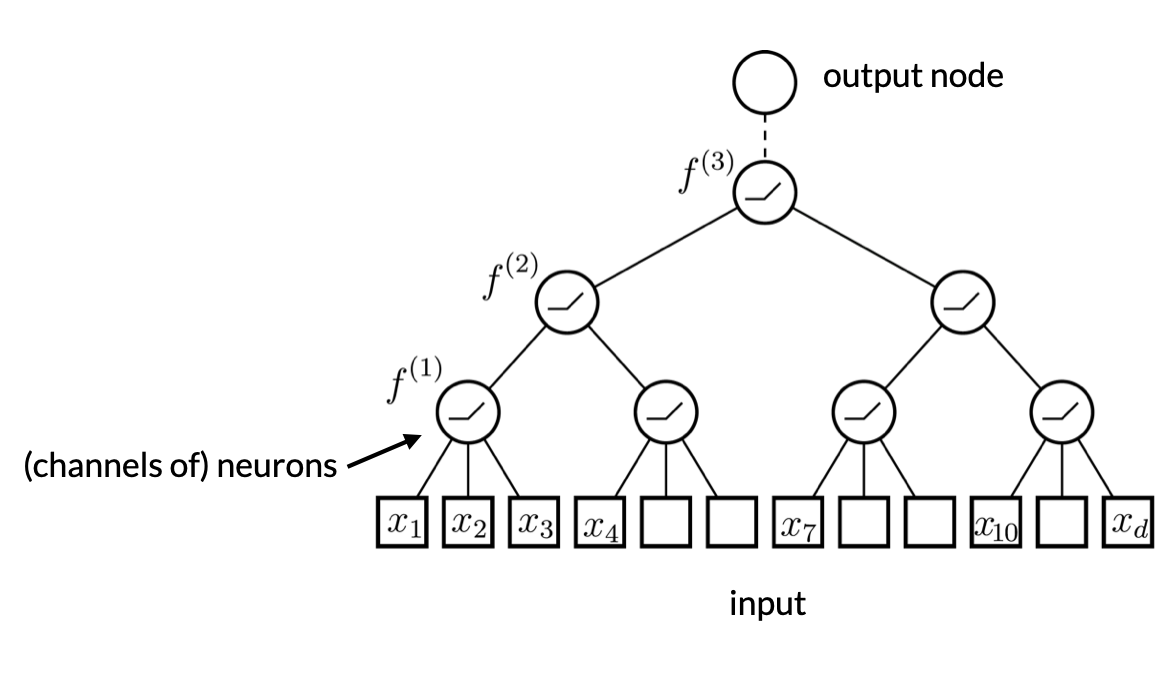

How deep neural networks learn compositional data: The Random Hierarchy Model. Physical Review X, 2024

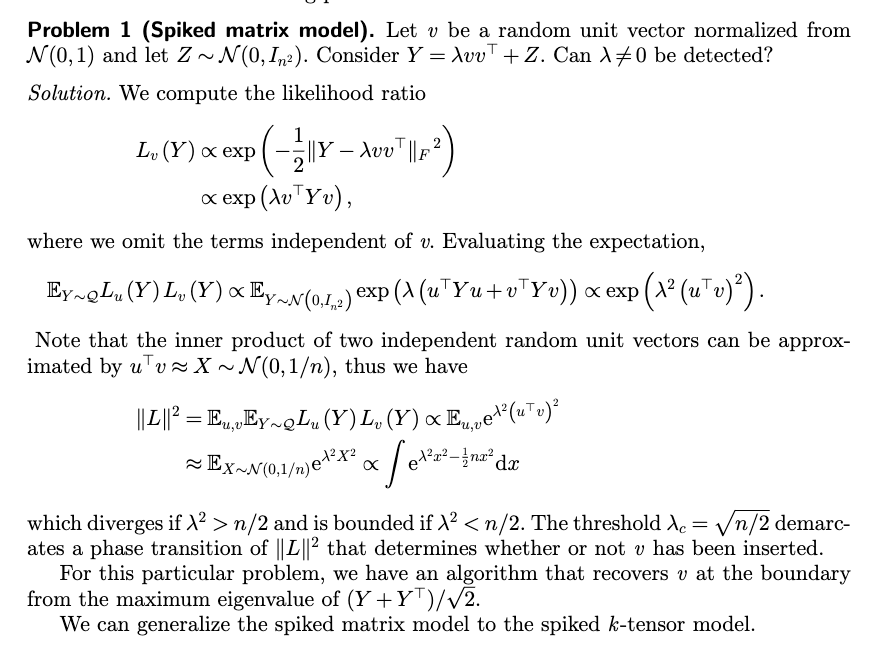

Computational complexity of deep learning: Fundamental limitations and empirical phenomena. Journal of Statistical Mechanics: Theory and Experiment (JSTAT), 2024

2023

What can be learnt with wide convolutional neural networks? International Conference on Machine Learning (ICML), PMLR, 2023

2021

Locality defeats the curse of dimensionality in convolutional teacher-student scenarios. Advances in Neural Information Processing Systems (NeurIPS), 2021

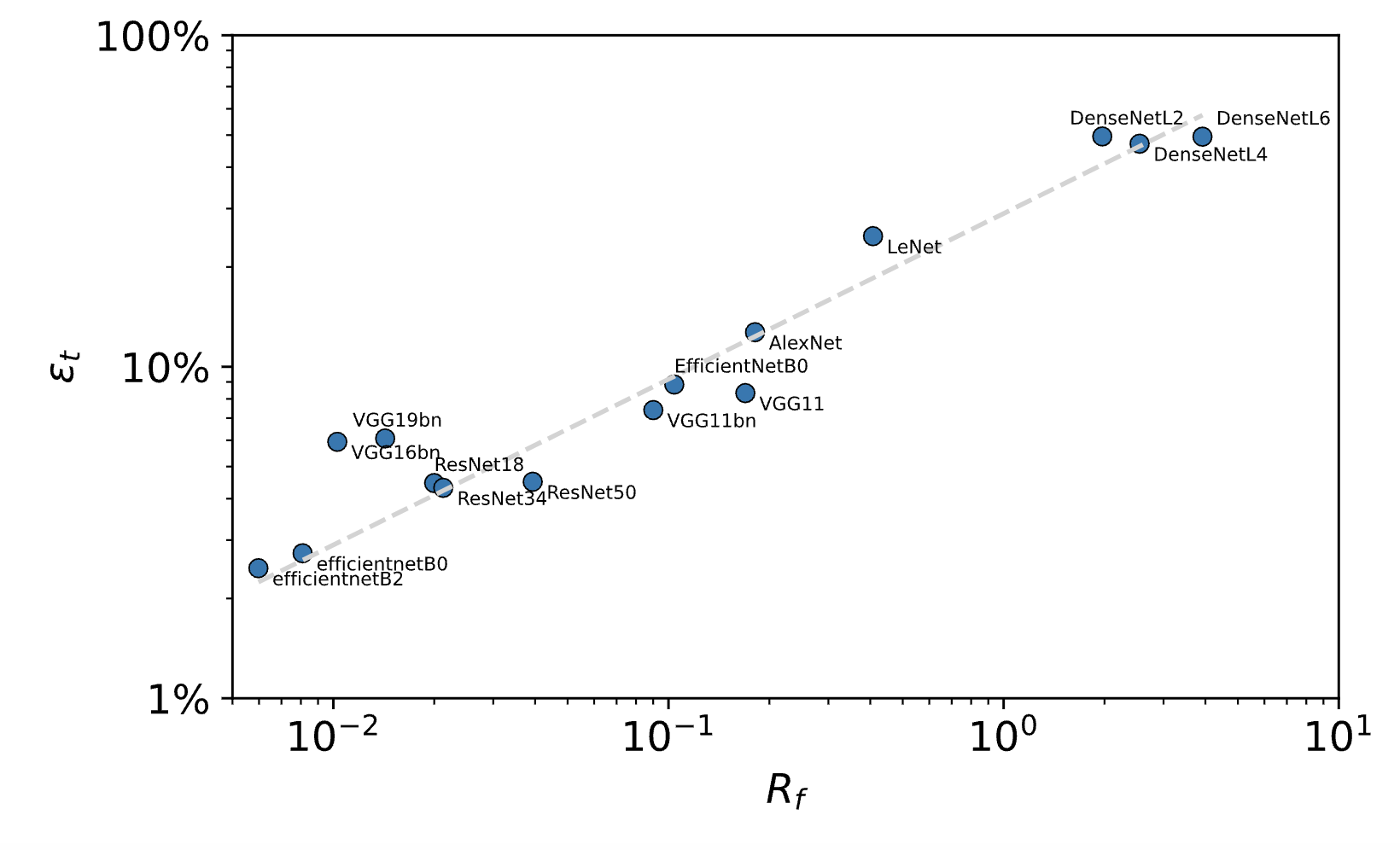

Relative stability toward diffeomorphisms indicates performance in deep nets. Advances in Neural Information Processing Systems (NeurIPS), 2021

2020

Spectral analysis of infinitely wide convolutional neural networks. Master’s Thesis, Sorbonne Université and Politecnico di Torino, 2020
